With a plethora of digital media channels at our disposal and new ones on the way every day(!), how do you prioritize your efforts?

How do you figure out which channels to invest in more and which to kill?

How do you figure out if you are spending more money reaching the exact same current or prospective customers multiple times?

How do you get over the frustration of having done attribution modeling and realizing that it is not even remotely the solution to your challenge of using multiple media channels?

Oh, and the killer question… if you invest in multiple channels, how much incrementality does each channel bring to your bottom-line?

Smart Marketers ask themselves these questions very frequently. Primarily because we don't live in a "let's buy prime-time television ads on all three channels and reach 98% of the audience" world anymore.

We have to do Search Engine Optimization. We have to do Email Marketing. We have to do Paid Search. We have to have a robust Affiliate network. We have to do Social Media. We have to do location-based advertising to squarefour people. We can't forget Mobile advertising. We have to… the list is almost endless. Oh, and in case you had not noticed… the real world is still there. TV and radio and print and… Oh my!

The reality is that we can't do all of those things.

Smart Marketers work hard to ensure that their digital marketing and advertising efforts are focused on the most impactful portfolio of channels. Maybe it is Search, Email and Facebook. Maybe it is Affiliate and Paid Search. Maybe TV and Twitter and Newspapers. Maybe it is five other things.

How does one figure it out?

Controlled experiments!

What's that? This: You understand all the environmental variables currently in play, you carefully choose more than one group of "like type" subjects, you expose them to a different mix of media, measure differences in outcomes, prove / disprove your hypothesis (DO FACEBOOK NOW!!!), ask for a raise.

It is that simple.

Okay, it is not simple.

You need people with deep skills in Scientific Method, Design of Experiments, and Statistical Analysis. You need the support of the top and bottom and middle of your organization (and your agency!). You need to understand all the environmental variables in play (a hard thing under any scenario) not just in context of your company but also as they relate to your competitors and ecosystem.

But if you have access to some or all of that (or can hire good external consultants), then your rewards will be very close to entering heaven. Marketing heaven that is.

To make the case for controlled experiments I want to share with you one simple, real world example I was involved with.

My explicit agenda is to spark an understanding of the value of even simple controlled experiments (that might need only some of the horsepower mentioned above).

My secret agenda is to illuminate the power of this delightful methodology via a simple example, and get you to invest in what's needed to move from good to magnificent.

Ready?

The Setup.

This is a multi-channel example. The company truly has a portfolio strategy when it comes to marketing. We are going to simplify the example to make it seem like they only do two things. They mail catalogs and they send emails. The purpose of both is also simple: Get people to convert online (website) or offline (call center).

The question was, should they do both? Should they love one more than the other? Is this digital thing here to stay or is the thing that has worked so well for 150 years – catalogs – the thing that still works ("the internet is a fad!")? What is the incremental value of doing email?

To answer this question the company took their customer lists (catalog and email) and identified like-type customers. Like-type as in customers that share certain common attributes. For your business, that could be people who have been customers for 5 years (or 5 months) or those that order only women's underwear or those that live in states that start with W or those that order more than 10 times a year or only men or people who were born on Jupiter or… (this is where design of experiments comes in handy :).

Then they isolated regions of the country (by city, zip, state, DMA; pick your fave) into test and control regions.

People in the test regions will participate in our hypothesis testing. For people in the control region, nothing changes.
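To make the region split concrete, here is a minimal sketch of one way to spread regions across experiment cells. It assumes random assignment (the post only says regions were "isolated", so that is my assumption), and the region labels, cell names and the `assign_regions` helper are all hypothetical.

```python
import random

def assign_regions(regions, cells, seed=0):
    """Shuffle the regions, then deal them out to the cells round-robin."""
    rng = random.Random(seed)          # fixed seed so the plan is reproducible
    shuffled = list(regions)
    rng.shuffle(shuffled)
    assignment = {cell: [] for cell in cells}
    for i, region in enumerate(shuffled):
        assignment[cells[i % len(cells)]].append(region)
    return assignment

# Hypothetical region labels; in practice these would be cities, zips, states or DMAs.
regions = [f"region_{n:02d}" for n in range(1, 13)]
cells = ["control", "catalog_only", "email_only", "no_marketing"]

plan = assign_regions(regions, cells, seed=42)
for cell, members in plan.items():
    print(cell, sorted(members))
```

Random assignment is only a starting point. A real design would also check that the like-type customer mix is comparable across cells before anything is mailed.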

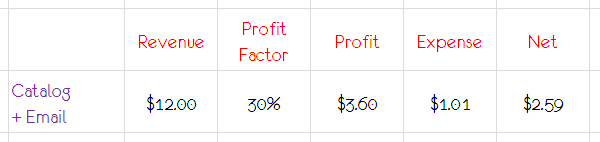

It is also important to point out that I am keeping the data simple purely to keep communication of the story straightforward. We'll measure Revenue, Profit (the money we make less cost of goods sold), Expense (cost of campaign), Net (bottom-line impact).

What is missing in these numbers is the cost of…. well you. The people. A little army in your company runs the TV campaigns. A larger army is the catalog sending machine. A lone intern is your email campaign people cost. A team of five are your paid search samurais. When you do this, if you can, include that expense as well.
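The four measures fit together as simple arithmetic. The sketch below assumes Profit is revenue less cost of goods sold and Net is profit less campaign expense, with an optional people cost as suggested above. The function name and every input value are hypothetical, chosen only for illustration; they are not the company's actual breakdown.

```python
def net_impact(revenue, cost_of_goods, campaign_expense, people_cost=0.0):
    """Bottom-line impact: profit minus what the campaign (and its people) cost."""
    profit = revenue - cost_of_goods      # money made less cost of goods sold
    return profit - campaign_expense - people_cost

# Illustrative inputs only (hypothetical, not taken from the experiment).
without_people = net_impact(revenue=12.00, cost_of_goods=7.00, campaign_expense=2.41)
with_people = net_impact(revenue=12.00, cost_of_goods=7.00, campaign_expense=2.41,
                         people_cost=0.50)

print(f"net without people cost: {without_people:.2f}")
print(f"net with people cost:    {with_people:.2f}")
```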

Enough talk, let's play ball!

The Experiment and Results.

Here's the outcomes data for the control version of the experiment. This group of customers was sent both the catalog and the email. Nothing was changed for them – this group was treated exactly as they were in the past.

If the company did both things, revenue was $12.

Because revenue is very often a misleading way to understand impact on the company's bottom-line, most smart people prefer to go for net impact (the result of taking out cost of goods, campaign expenses etc.).

In this case, that amounted to a bottom-line impact of $2.59.

[If you want to learn how a focus on the bottom-line, especially net profit can change your life, and I mean that literally, please see this video: Agile, Outcomes Driven, Digital Advertising. Parts two and three, Rockin' Teen and Adult (Ninja!).]

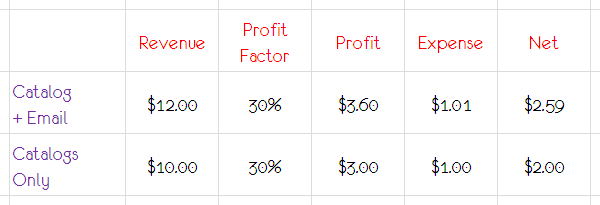

Here's the data for variation #1 of the experiment… this group of like-type customers was sent only the catalog – no email. The marketing messaging and timing and all other signals for relevancy and offers used for this group were exactly the same as for the control group.

Compared to the control group, revenue went from $12 to $10. Company expense went down a little bit (email campaigns after all are not free).

The net impact went down from $2.59 to $2.00.

17% reduction in revenue, 23% negative net impact to the bottom-line.
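Those two percentages come straight from the numbers quoted above. A quick sketch of the arithmetic (the `pct_change` helper is just an illustrative name):

```python
def pct_change(baseline, variant):
    """Percentage change of the variant relative to the baseline."""
    return (variant - baseline) / baseline * 100

control = {"revenue": 12.00, "net": 2.59}        # catalog + email
catalog_only = {"revenue": 10.00, "net": 2.00}   # variation #1

for metric in ("revenue", "net"):
    change = pct_change(control[metric], catalog_only[metric])
    print(f"{metric}: {change:.1f}%")
# revenue: -16.7%
# net: -22.8%
```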

Does that help you understand the incrementality delivered by the campaign that is missing in this variation of the experiment (email in this case)? You betcha!

No politics. No VP of Email vs VP of Catalog egos and opinions involved. No "you are trying to mess with my budget" spit on your face. No "but that is not what Guru X at a conference said" or "but that is not what people on Twitter think." None of that. Just data.

How sweet is that?

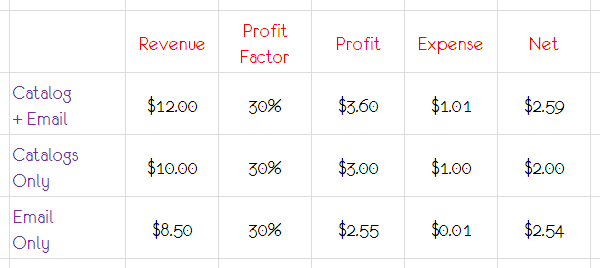

Here are the results of variation #2… this group just got the email. The killing of trees, filling of recycle bins, and breaking the backs of postal carriers was paused. :)

Again, and I can't stress this enough, all else was equal.

Compared to our control group there was a whopping 29% reduction in revenue. OMG!

But, a bigger OMG is coming: the net impact on the bottom-line of the company was a measly 2%! OMG!!

So the incremental value delivered by combining a catalog campaign with an email campaign is an increase of 2% on the bottom-line of this company.

Not for every company on the planet. Not even for all campaigns you do. But for this campaign and these types of customers you can confidently say: "Yes there was a drop in revenue and if you care about that, oh beloved HiPPO, then let's send more catalogs. But at least now you know the net incrementalism delivered to our bottom-line from doing that."

If your HiPPO is smart, and in my experience many HiPPOs are smart and well-meaning, she will ask you this: "Is that 2% ($0.05) sufficient to cover the salaries, pensions, health benefits of everyone we employ to do catalog marketing?"

Controlled experiments also allow us (Analysis Ninjas) to do some subversive work. A question that came to my mind was: What is the incrementality of doing any marketing at all? What would happen if we do nothing, and we retire all our marketing people? Would the company go under?

Now it is rare that questions like those get asked. But it is too tempting not to use this methodology to get a sense for what the answers might be.

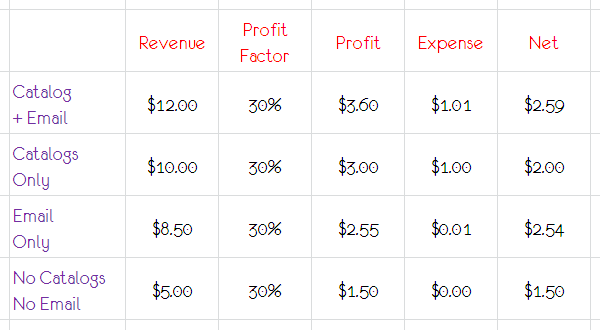

So for variation #3, no catalogs or email were sent to the customers in the test group. Here are the results…

It turns out if you completely stop marketing, and you are an established company, the impact is not that your revenue goes to zero! :)

Revenue in this variation went down 58% (pretty big). The impact on net to the bottom-line was a reduction of 42%. Both not great, but not zero.

So now you have some sense of what is the incrementality of all the people in marketing (their salaries, pensions, expenses etc.), and what you have to compute is if it is less than or greater than $1.09 (the loss in net impact).
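Putting all four cells side by side makes the comparison easier to see. In this sketch the control and catalog-only figures are the ones quoted in the text. The email-only and no-marketing figures are assumptions, back-derived from the quoted percentages and dollar differences (29% and $0.05, 58% and $1.09). The function and key names are hypothetical.

```python
# (revenue, net) per experiment cell. The last two rows are back-derived
# assumptions, not values stated outright in the text.
CELLS = {
    "catalog + email (control)": (12.00, 2.59),
    "catalog only": (10.00, 2.00),
    "email only": (8.50, 2.54),
    "no marketing": (5.00, 1.50),
}

def compare_to_control(cells, control_key):
    """For each cell, how much revenue and net is given up versus the control."""
    control_rev, control_net = cells[control_key]
    rows = {}
    for name, (rev, net) in cells.items():
        rows[name] = {
            "rev_drop_pct": (control_rev - rev) / control_rev * 100,
            "net_drop_pct": (control_net - net) / control_net * 100,
            "net_lost": control_net - net,
        }
    return rows

rows = compare_to_control(CELLS, "catalog + email (control)")
for name, row in rows.items():
    print(f"{name:28s} revenue drop {row['rev_drop_pct']:.0f}%  "
          f"net drop {row['net_drop_pct']:.0f}%  (${row['net_lost']:.2f})")
```

The `net_lost` column is the number to hold up against the people cost of each channel: $0.05 for the catalog operation, $1.09 for all of marketing.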

Talking just a smidgen more seriously, eliminating catalogs and emails (and all marketing) might not make the company bankrupt immediately. But that is simply an outcome within the confines of this experiment. And it is easy to imagine how the impact might just get worse over time. The nice thing is that you can also test that!

Good lord I love this stuff!

The Lessons from this Controlled Experiment.

It is possible to compute incrementality of adding or removing marketing strategies.

It is possible to go back and use this incrementality to make solid, long-term new decisions for the business (and not to keep doing what you have forever until your business goes bankrupt).

It is possible to take politics, bickering, back stabbing and all that ridiculous stuff out of the picture. Okay maybe not all of it, but a lot of it.

It is possible to determine the value of doing Paid Search campaigns for brand terms where you already rank #1 via SEO. It is possible to understand if you should invest in Facebook at all. It is possible to understand how much to support your TV campaigns via Yahoo! display campaigns. It is possible to specifically nail down every incremental dollar added to the bottom-line of adding YouTube to your Search campaigns and then adding radio campaigns and then adding magazine ads and then adding Twitter. And along that chain it is possible to understand exactly when you've reached diminishing margins of return!

Important: The lesson you should not take from this is that catalogs don't work. They may work for you, they may not. All you should take away are the possibilities outlined above.

Closing Thoughts.

Here are some important bits of context, and a few more lessons I've learned from having done this a bunch of times…

* The results you see above are raw end results. The team did the normal modeling to ensure that the results were statistically significant (large enough sample set, sufficient number of conversions in each variation).

* It is not always easy to get exact replica (like type) customer sets. There are always things that are a little bit beyond your control. Do the best you can.

* Work as hard as you can, and then some, to ensure that there are as few "disturbances" as possible in your test and control groups. In the middle of the experiment don't start a new paid search campaign or tweeting like a crazy duck to the same set of customers. Shout loudly until the entire company knows what you are up to (and beg for their co-operation).

* No answer is ever definitive, so act on the results immediately.

* In the same spirit the best companies in the world know that you are in a constant testing mode. There are so many factors that can affect your results. Seasonality, shifting consumer behavior, competitive landscape changes, disruptive product introductions, new technologies, legalization of illegal things, so many more things.

So you test, learn, rinse, repeat, become awesomer.
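On the first point above, statistical significance: the post does not say which test the team used, so the sketch below swaps in a plain two-proportion z-test on conversion rates as one common option. The conversion counts are hypothetical, and the function name is mine.

```python
from math import sqrt, erfc

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rate between two groups."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)             # rate under "no difference"
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = erfc(abs(z) / sqrt(2))                     # two-sided normal tail area
    return z, p_value

# Hypothetical counts: control converts 600 of 10,000, test cell 500 of 10,000.
z, p = two_proportion_z_test(conv_a=600, n_a=10_000, conv_b=500, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A test like this only covers conversion rate. Revenue and net per customer are continuous measures and would need a different test, which is one more reason to have someone with design-of-experiments skills on the team.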

If you want to learn more about controlled experiments, and see more examples and a case study, please jump to Chapter 7 and page number 205 in your copy of Web Analytics 2.0.

Bonus: Here's one of my favorite articles… all the way from 2007 but chock full of pithy valuable lessons for all of us regardless of our field: 41 Timeless Ways to Screw Up Direct Marketing

Bonus 2: Google Analytics has a wonderful set of reports called Multi-Channel Funnels. They are very good at showing how many outcomes are delivered via multiple media channels (say search + Facebook + display campaigns vs search only). They are also very good at telling you the order in which the person was exposed to these channels. It is important to know this is happening, and how much. Multi-Channel Funnels reports won't answer the questions at the top of this post. They might tell you how urgent it is to answer them (see this video, min 21 onwards: Google Analytics Visits Change). Even if you use other dedicated tools in the market that do "attribution modeling" you still won't get the precise answers you need to optimize your channels. Your only path out? Controlled experiments. Go back up and read this post again. :)

Okay it’s your turn now.

Are controlled experiments a part of your marketing and analytics portfolio? If yes, would you share one that perhaps was your favorite? If no, what are the barriers to adopting them in your company? Having read this post what might be the biggest downside to experimentation? What do you find exciting?

Please share your feedback, excitement (or lack thereof), life lessons via comments.

Thanks.

A great and inspiring article Avinash!

Can you share some thoughts how to set up experiments for online media mix modeling?

I've seen you write about it in your book 'Web Analytics 2.0', however I'm struggling how to set it up. Especially because we're talking about visitors, without knowing anything about the visitor itself.

Flapdrol: I wish that I could provide a pithy answer about setting up a test, it is complex. While doing the test I suggest in this post is a little simpler, it still requires some amount of expertise in design of experiments etc.

There are links in the post you can click on to read. I would also suggest that if you don't have the expertise inhouse then to get it from the outside.

A quick tip: There is a huge difference between doing online A/B test and Multivariate Experiments. You want someone with expertise in Controlled Experiments specifically. I've had a lot of luck working with offline Direct Marketers, they have been doing this since the time of Adam and Eve.

Avinash.

My addition to the post – check out how GA seamlessly handles attribution to your marketing channels, i.e., all the touch points you have with your customer.

http://analytics.blogspot.com/2011/08/introducing-multi-channel-funnels.html

Awesome post.

As usual, a great post from you.

Well said- "No answer is ever definitive, so act on the results immediately."

Great post and a bit scary for the marketing people :-)

Billig: I hope Marketers are scared. But I also hope that they'll see that there is an opportunity here to do a lot better what they do every day.

There is a path here to do the almost impossible three things: 1. Make a lot more money for their companies 2. Delight their customers like never before (no more spamming them with irrelevant stuff) 3. Increase their own salaries.

Worth being scared about right. :)

-Avinash.

Once again, Avinash, your idea seems so simple, yet implementing it is the tricky part. I'm sure a lot of organizations wouldn't let their Analysis Ninjas go as far as to "disrupt" business long enough to create these experiments. It can be a big pill to swallow to do anything different, let alone a major shift involving marketing mixes and customers of a given region, etc.

I started small in my organization. I run organic search, while my pal/fellow aspiring Ninja runs paid search. We had all the buy-in we needed between the two of us to experiment with the relationships between paid and organic search and the proverbial shelf space. We have nothing concrete to report yet, but the experiment has been designed and the cake is in the oven. Now it's just a matter of sporting our lab coats and waiting to exclaim "Eureka!"

Josh: It is very hard to do simple things. Here is one of my all time favorite quotes:

Nice, eh?

Controlled experiments require a lot of love, passion, patience, math and loads of willingness to want to be better. Many companies fail on that last point. They are looking for simple solutions to complex problems. Quick fixes. What they fail to understand is that if solutions to complex problems were easy then everyone would be doing them!

But all the more reason for you and I and our beloved Occam's Razor community to do it. We'll build a competitive advantage for ourselves and one day, deservedly, rule the world! :)

I am so glad that your experiment is in the works. All the best!

-Avinash.

now you made it again! this quote is the story of my life.

speaking of your post, i love the fact that it's so easy to conduct controlled experiments with ppc… do expensive ppc broad keywords really fuel brand and long tail search? or are they the catalogues of our days? :)

thanks!

Good lord I love this stuff too.

Thanks for another very insightful post.

If your company uses more than 1 marketing channel, this is the only way to measure results accurately. I realize a lot of people may not like this idea or accept it, but it's reality and established best practice in all the other measurement worlds other than web analytics (ever heard of a placebo in drug testing?).

Now, if we could only get all the marketing providers to fully embrace this reality ;) Some of the email folks have in fact made great strides in this area recently.

Re: GA / multi-channel funnels, this is also a good time / place to point out that just because someone was *exposed* to marketing does not mean it had any incremental effect, and this truism is at the very core of Avinash's post. Multi-channel funnels, by themselves, do not solve this problem, they simply show a sequence of events.

The question controlled testing answers is this: Which events in the sequence are the most powerful in terms of driving sales and profits?

"Multi-channel funnels, by themselves, do not solve this problem, they simply show a sequence of events."

Could not agree more. In fact, let me up the ante – single channel funnel don't show incremental impact, either.

Have plenty of examples.

Avinash,

Experimental Design (Test/Control) methodologies, some dating as far back as the nineteenth century, have been used across many fields and in traditional marketing for years, and their applications still hold true for various digital and traditional marketing tests.

One thing I would like to add: For new analysts who have just started their career in traditional/digital marketing analytics, Test and Control design is very important to understand. Test/Control Design is a real application of statistics in marketing.

1) As you mentioned in your closing thoughts, once you get response rates for different groups then you check the statistical significance using t-test/test of binomial proportion etc. depending on design setup.

2) How big should the sample size be to make the experiment valid? The answer depends on expected response rate; based on the results of past marketing; the expected variation among subgroups of the market; and the complexity of design, including number of attributes and levels.

3) Statisticians also use logistic regression models for experimental design. For example: in credit card marketing the possible combinations of brands, cobrands, annual percentage rates, teaser rates, marketing messages and mail packaging can quickly add up to hundreds of thousands of possible bundles of attributes. Clearly you cannot test them all. By applying a logistic regression model to the experimental design, a statistician can help the marketer boost ROI: conduct tests using only a few attributes, then apply the model equation from the cells already tested. Using logistic regression with Test/Control design, the tester ends up with a full set of consistent results for all possible permutations. Boost your Marketing ROI with Experimental Design (http://hbr.org/product/boost-your-marketing-roi-with-experimental-design/an/R0109K-PDF-ENG) is an excellent, easy-to-read case study from Harvard Business Review. I found a free version here.

http://www.peerengage.com/downloads/Boost_ROI.pdf

Thanks for the great post.

Shilpa Gupta.

Great post!

It was a story about sucking. All channels suck alone but when they are combined they suck less.

How to prioritize your efforts, that is THE question… sometimes I feel I spend more time stumbling around trying to figure out where my energy is best spent than actually doing anything productive.

I'm not an analyst or anything close to. I had an idea for a website that I thought would be simple thing to explain to a dev. Apparently not (it's partly there but it is not what I envisioned – yes I know all about dev's complaining about pesky clients who don't understand what they are asking). Then I consulted SEO experts, and quickly found out that there is but a google search between what many of them know and what I can find out for free. So I started doing much more of my own research (which is how I came across your absolutely brilliant blog). It's a very steep learning curve, and when so many options open, you almost become paralysed (Lions AND tigers AND bears, oh my and, and, and).

With hindsight, I would have done things very differently from the beginning – from an SEO point my database is a disaster. Alas my financial resources have been stretched too far for a complete redo, so I'm trying to make it work (it may be taking a duck to eagle school – we shall see) I handle ALL aspects of blog and site other than programming, and it's overwhelming. You have set me straight on this at least: Clearly I need to pick some angles and then do what I have avoided – controlled experiments.

Thank-you for explaining things so well that even where I do not understand all of it, I understand enough to at least have direction.

Hi Avinash,

according to your data there are some interesting conclusions:

NET income / Revenue Rate

E-mail + Catalogue 2,59 / 12,00 = 21,58%

Only Catalogue 2,00 / 10,00 = 20,00%

Only e-mail 2,54 / 8,50 = 29,88%

NO e-mail nor Catalogue 1,50 / 5,00 = 30,00%

The best ROI for this Company is to stop advertising themselves. Right?

Greetings from Croatia :))

Hi Avinash,

the net income / revenue rate is the percentage of revenue we've actually earned. If this rate is 20% that means that from every 1$ revenue we have earned 0,20$

mvarga — good job taking the analysis one step further. You discovered one of the fascinating findings that people uncover when they do this type of work. You learned that there are advertising channels that generate incremental sales, but do so at a low rate of profit (or low ROI rate). About 15 years ago, as Manager of Analytical Services, I presented data just like this, and my VP got angry with me, telling me that "percentages don't pay the bills". In other words, he was fine with an advertising channel that had a low ROI as long as it generated sales dollars and profit dollars.

When I work with CEOs, I give them something additional to think about. I focus them on the comparison between email only and catalogs + email. Notice that the revenue column is very different, but the profit column is very similar. I ask the CEO to think about all of the company resources that are required to generate the $3.50 of revenue at only $0.05 profit. I ask the CEO how the catalog dollars might be spent differently. Could the money be invested elsewhere? How about all of the staff required to generate $3.50 of revenue that only generates $0.05 profit: could those employees be used elsewhere at a better return on investment?

Usually, this discussion results in a different investment strategy … the CEO isn't thrilled with $3.50 revenue that only yields $0.05 profit.

This is the magic of the style of testing that Avinash advocates. As Shilpa said, this type of testing has been around for decades/centuries … but it is under-leveraged. And as Jim Novo says, you will come to the wrong conclusion using any attribution model … attribution models will tell you that catalogs and emails work together to yield a positive result, while Avinash demonstrated here that there are strategic considerations regarding the effectiveness of catalog marketing that the CEO must know about.

Web Analytics professionals have such a huge opportunity to take advantage of this methodology. You are smart, smart people. Take advantage of what Avinash is sharing with you!

Thanks for the Valuable information Avinash!!!

Usually, most marketers fear experiments in case something goes wrong. In this digital world marketers should be proactive and creative while reaching/engaging the customers.

One thing I may add as a word of caution is that net income is not always the best indicator of a campaign's success. That is, within a reasonable time frame. The example of the catalog & email are both to an existing customer base, where it's fine to use profitability as the ultimate gauge of success. In the case of customer acquisition it may not be prudent to only look at the bottom line, at least in the short term. It's just something that needs to be built in to your tests, certainly not a reason to not test :)

Where this method starts to get fuzzy for me is with branding efforts. How can I measure the effectiveness of a given magazine ad when I have 100+ ads running throughout the year? How can I measure the value of a Facebook fan for my brand? Both are examples I'm struggling with measuring. If you could point me to a resource to help answer these burning questions I'd be willing to send you a whole bushel of apples. Granny smith, red delicious, you name it. A whole bushel.

ave: Good point.

The nice thing is that "I want to acquire customers" or "this is a branding campaign" are no longer valid excuses for not measuring what works for a company. Both of those things can be measured, if not in terms of net impact then in terms of the Economic Value added. [Branding Marketing Metrics. Customer Lifetime Value.]

We can further undertake longer term studies to compute customer lifetime value of customers acquired via / exposed to above campaigns and still confirm what the incremental value is of those campaigns. The bigger the company (proxy for the more money they spend on marketing) the easier this is to do.

Regarding value of a fan…. I experiment with participation in social channels all the time and try to tie that participation to Per Visit Goal Value or Economic Value added as a first cut. Should I even be there or not. If you are lucky to have an integrated customer database then it becomes easier to create a group of customers who are fans of the brand on a social channel and analyze their behavior over a period of time. That should get you going. :)

It is not easy, but doable.

Avinash.

Avinash,

Loved this post for its value and especially because I totally believe in controlled experiments – I think they are great and a reliable way to test/evaluate your ideas.

The one thing I do stress very much (and you underlined it as well) is that it has to be set up properly so that no unnecessary biases pollute the results and the conclusions (or causality) drawn from it. Considering that many business scenarios (like test markets) will also likely be quasi-experimental, I completely agree with your point about asking for help from someone grounded in research design.

Even with that said, I agree with you that this methodology is a great way to study bottom-line impacts of variants and move forward in the path of optimal decision making.

Great read.

Regards,

Ned

Taking into account some of the comments about marketing and business culture above, I’m wondering if it might be worth it to re-frame this discussion a bit.

Speaking as a measurement-oriented marketer, I have always viewed success measurement as a continuum of “confidence”. Not statistical confidence per se, but a similar idea; how confident am I that the success measurement truly represents the actual outcome? If I had to bet my own money (as I do in my own web businesses) across various marketing efforts based on the results of testing, what criteria do I use?

The criteria I use is a duality: more than just the test results; it's how confident I am that the test results truly represent the actual outcome. And this confidence is really based on the test design. The controlled test approach described above is one I am very highly confident in, because it comes from the heart of the scientific method.

It’s not just the “science” though. What’s really important is this: I have seen controlled testing *disprove* so many theories about what actually works in marketing. Often in completely mind-blowing ways that totally change the way people think about the business and their customers.

So as an analyst, I think you have a responsibility to point out test design issues to marketing people, because the analyst’s role is not to “justify”, it’s to analyze. In my mind, the importance of doing this varies directly to the size of the investment and how critical the program is to the company.

If you are involved in the success measurement of an important program, it’s your duty to point out that there may be “better ways” to measure the outcome of these programs, and explain the controlled testing idea (or other ideas). How confident does the marketer want to be that the right decision is being made? If they choose not to be as confident as possible, that’s their problem.

But to let a marketer have false confidence in an outcome is the analyst’s problem.

Is it a tricky discussion? Sure. As pointed out above, a controlled test often involves “giving up” some sales in the short term for supremely confident knowledge about what actually works and how well it works, a tough call that probably needs some management buy in. But the scale is often awesome: you give up $20,000 in sales this month and make an extra $500,000 in profit the following 6 months. Management tends to care a lot about ideas like that.

Or maybe they don’t. But this is not your call, it’s a management decision, right?

Analysts should at least give management the opportunity to make this call.

Thoughts?

Jim: Amen!

I love the thought that an Analyst's role is "not to justify, it's to analyze." I suppose the analysis we present so often seems to be counter intuitive or goes against "commonly held wisdom" that we shift into justification mode.

That discussion leads to the importance of understanding people and their psychology and having that as important context when presenting analysis. We are not shrinks, but it does not hurt to know what motivations are at play at the other end (in the recipients of our analysis).

-Avinash.

I think having controlled experiments is necessary in any environment. I think the biggest issue is not asking whether we should do controlled experiments, but rather convincing the top levels of organizations that it is important to do extensive experiments like these, instead of the usual mass test and deciding from that one test.

Great post Avinash.

Controlled experiments are not a big part of my marketing and analytics portfolio because the company is small and service-based. Controlled experiments would be awesome for someone selling a tangible product, but we ship cars overseas. I would use controlled experiments like A/B testing or something web based, but nothing tangible like catalogs or brochures. Needless to say, experimenting (testing) is something that can show me change.

Wonderful Post.

I think the simplest controlled experiment, often referred to as an A/B test, is quite interesting. The concept is easy to understand, and the vital thing is that Avinash shares something here that is rarely discussed. Current markets are really competitive and we need something to give us a boost. This will help many experimenters measure their results and productivity.

For the sake of clarity, would just like to point out that the experiments Avinash is describing are *not* A/B tests as commonly thought of in web analytics. They could be called A/Null tests, testing the *absence* of a campaign, not multiple versions of a campaign. This is a very important difference.

If you look at the last example, $5 in revenue was generated with no campaigns at all. This is the core of the argument, that if $5 in revenue was generated with no campaigns, and you add a campaign such as email that generates $8.50 in revenue, you can only credit $3.50 to email ($8.50 – $5 = $3.50). This is why you have a control group that receives no campaign, to get a baseline to measure against.

And it's why the word "incremental" is used to describe this approach; it measures what a campaign *adds* to revenue. It's the most honest assessment of campaign value. This concept is particularly important with interactive media, and especially so if you believe in the value of "customer experience", because at least part of this $5 generated by the group with no campaigns is due to good customer experience, it's the value of "loyalty".

So, this value should not be credited to a campaign that does not generate it.
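
The arithmetic in the comment above can be sketched in a few lines of Python. The dollar figures are the ones quoted in the comment; the variable and function names are illustrative assumptions, not part of the original post.

```python
# Revenue figures as quoted in the comment above.
control_revenue = 5.00  # control group: received no campaign at all
email_revenue = 8.50    # test group: received the email campaign


def incremental_revenue(treated: float, control: float) -> float:
    """Revenue a campaign adds on top of the no-campaign baseline."""
    return treated - control


email_increment = incremental_revenue(email_revenue, control_revenue)
print(f"Credit to email: ${email_increment:.2f}")
```

The baseline is subtracted before any credit is assigned, which is what keeps the "loyalty" revenue from being attributed to the campaign.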

Jim: Such an important clarification, thank you so much for making it so eloquently.

I am so appreciative of your insightful comments here as I have learned so much from you over the years.

Arigato!

Avinash.

Good article overall and one obviously needs to measure ROI, but unfortunately too many people focus on the immediate numerical outcomes themselves and not on the causal factors or big picture. Some examples follow.

Causal factors (any one of which could invalidate the test):

• Was the driver poor creative, merchandise selection, pricing, etc. of the advertised items? Poor appeal – poor lift.

• Was the timing of email and/or the catalog mailing a contributing factor? i.e., if they had waited another 6 months, perhaps the catalog would have performed better! What about cadence? Are emails so frequent that the net gain from each is low?

• Was a competitor advertising similar items at a better price near the same time undercutting the campaign performance?

• In addition, I could point out several likely causal/contributing issues (even with the test design and set-up described) that could have driven results: I see poor design skewing so-called ‘test’ results FAR too often… So little rigor is typically performed here that it’s garbage-in, garbage-out and I’ve developed analyses to mathematically match test and control cells for clients to ensure that this is not a driving factor.

Big Picture:

• Incrementality was low, but doing nothing is not likely to be a viable long-term business strategy: The control customers (no catalogs or email) are likely still purchasing based upon the residual impact of previous communications.

• What is the longer-term impact of the campaign and what other business factors were affected? Myriad research studies point out that measuring advertising’s short-term results does not adequately represent the full ROI perspective nor account for the branding impact, etc. – there’s so much support here that I wrote a presentation on the value of advertising/branding (sorry, not for distribution but there’s plenty of publicly-available evidence).

• Similarly, what is the customer lifetime value (CLV) of the buyers reached with the campaign, etc., etc.

Net: Proper set-up is often avoided, but mandatory for meaningful results. Due diligence is required to evaluate potential causal factors and to determine WHY the results are what they are. Finally, think big picture and measure longer-term to ensure you’re capturing the full ROI and ROO (return on objectives) of your campaigns.

Steve: Thank you so much for adding your thoughts on things to worry about. I concur with you on most of your thoughts below.

My stress on hiring someone with a background in the Scientific Method and DOE was to ensure that, to the extent possible, we avoid some of the silly mistakes. My perhaps even deeper stress at the end of the article, on taking action quickly as "no insights last forever," was precisely so that we do not take what comes of controlled experiments as the permanent word of God. We experiment. We learn. We take action. Rinse and repeat. :)

Avinash.

Avinash

I appreciate how measured the article is and how explicit it is about uncertainties. If I may add more qualifiers:

1. We are paid big bucks for making better and plausible hypotheses and for the right experiment design. While every hypothesis can be tested, we need to apply mind, prior experience, or in some cases even gut feel to determine those worth testing. When we run multiple experiments for testing trivial hypotheses (like color or font used in mail campaigns) we will get spurious results. A funny but very clever illustration of this comes to us from xkcd http://xkcd.com/882/

2. Your statement, "So you test, learn, rinse, repeat, become awesomer.", could be interpreted as "more of the same tests". The purpose of experimentation is to reduce uncertainty in our decision making. There should be incremental learning from each experiment run.

3. The scope of the findings and how widely applicable they are is very relevant. We should be careful about extending the results from testing one segment and applying them to another.

4. Specifically regarding the catalog example, beware of hidden hypotheses that we take for granted. In a catalog that lists many different SKUs of different price ranges, the hidden hypothesis that is treated as true is, "preference for email or mail catalog is the same across the price range".

5. When using tests for statistical significance, beware of large sample bias. In large samples (samples > 300), small statistical anomalies will get magnified and will look statistically significant. One way to fix this is to pick a random sample from each group and test for significance on those samples rather than on the entire groups.

6. Lastly, controlled experiments only tell us that the 'treatment' made a difference. But they do not tell us 'Why'.

-Rags Srinivasan
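
Point 1 above (spurious results from running many trivial tests) can be made concrete with a small calculation. This is a minimal sketch that assumes the tests are independent, that none of the tested changes has a real effect, and that each test uses a 5% significance threshold; the function name is illustrative.

```python
def prob_at_least_one_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Chance that at least one of `num_tests` independent tests of a
    true null hypothesis comes out 'significant' at level `alpha`."""
    return 1.0 - (1.0 - alpha) ** num_tests


for n in (1, 5, 20):
    chance = prob_at_least_one_false_positive(n)
    print(f"{n:>2} tests: {chance:.1%} chance of at least one spurious 'win'")
```

Under these assumptions, twenty trivial tests are more likely than not to produce at least one "significant" result by chance alone.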

I am a computing science graduate and work closely with different marketing organisations as a software consultant. I find it absolutely incredible that, 90 years after Claude C. Hopkins wrote his "Scientific Advertising", the methods you describe are still largely unfamiliar to a large group of marketing professionals.

To quote Hopkins:

"Scientific advertising is impossible without [knowing your results]. So is safe advertising. So is maximum profit.

Groping in the dark in this field has probably cost enough money to pay the national debt. That is what has filled the advertising graveyards. That is what has discouraged thousands who could profit in this field. And the dawn of knowledge is what is bringing a new day in the advertising world."

All of this is not new. Even applying these techniques to marketing has been around for almost a century. How is it that it still requires explanation?

Lukas: I humbly believe it is a function of the population involved.

Marketing Analytics was the privilege of the few. Smarts, people, opportunity, desire, data, simply did not exist enough if you think of the last 90 years (or I dare say even 20 years ago). So the few involved in "data" and "optimization" knew and did some very cool things.

If you look at the present, the number of people involved in Marketing Analytics is, and I am underestimating, in the millions. The number of people involved in a higher level of Marketing Analytics is in the hundreds of thousands.

Yet while tools are numerous, and even free, there is no avenue for us the "masses" with access to data to learn and be smarter about it. There are hardly any university degrees, certainly not digital ones, that teach this savvy.

So you and I have to take on this deeply delightful task to share the good word, to elevate the understanding of what's possible, to take us all to a better place. That job's just starting, even if the knowledge of it is rooted in things we knew 90 years ago!

Avinash.

Wonderful topic Avinash! :)

I knew Kevin is a passionate advocate of the hold out test and it's awesome seeing Jim, Kevin, and yourself giving us insight into your thinking on this. I just saw an article that perhaps gives us some hope that a data educated analytics and management workforce may be on the way (http://gigaom.com/collaboration/prepare-to-fill-one-of-1-5m-data-savvy-manager-jobs/).

Totally agree that the design of the experiment is so important for drawing the right conclusion. I work for a large enterprise and we have these crazy smart stat/data people, but sometimes certain hold out tests might not be practical (e.g. when a national media campaign is involved). However difficult it is though, it's definitely a very powerful and clean way to figure out the efficiency of different marketing vehicles.

Also wholeheartedly agree with this TED presentation by mathematician and magician Arthur Benjamin in support of a statistics focused education instead of a mathematics focus. I definitely wish I took more stat classes instead of calculus!

http://www.ted.com/talks/arthur_benjamin_s_formula_for_changing_math_education.html

I fully agree that formal testing is the only reliable way to measure incremental results. But, as you briefly mention in your section on removing all promotion, the immediate impact of a test can be misleading. Permanently discontinuing catalog promotions might well harm long-term email results. It's not enough to say "you can test this too", knowing that many people will simply seize on the short term result and not bother with the long-term test.

A responsible analyst will ensure that her initial test includes long-term tracking and be sure that all reports reference both short- and long-term results. This is partly to ensure the test doesn't lead to bad decisions, and partly to avoid a critique that uses lack of long-term results to question the validity of the entire test process.

David: I concur with you on the likely implications of completely shutting catalogs down, this test is not meant to validate that hypothesis one way or the other. The goal is to simply identify what the incrementality currently is (for the test group customers) of doing both email and catalog (or either one only).

Even with the poor reading of the test my hope is that you would shut catalogs down only if the cost of running the catalog program (people, process, systems etc – stuff not accounted for in cost) is greater than incremental Net Impact to the business.

A lack of long term measurement is often cited as the reason to keep the status quo (long after its value has elapsed). You are so right to point that out.

Thanks so much for sharing your valuable feedback.

Avinash.

Another good post.

Apart from the length of the article, I liked it a lot.

The way I would look at these results, if I were advising the decision maker, is that the incremental impact of the catalogue on revenue is actually pretty impressive. It doubles revenue over having no marketing ($10/$5) and raises it 41% over email alone ($12/$8.50). So it's not a matter of the traditional media having less impact than the digital media. People are apparently not just throwing the catalogue in the trash. The problem is that the traditional media costs so much more than email to produce and deliver. So I'd wonder if something like that impact could be maintained for lower cost. Maybe the catalogue could be slimmed down by half. Maybe it only needs to be a circular. Maybe we only need to mail a piece of colored paper that reinforces the items featured in the email. Etc…

One of the things the results make me wonder is what the people are actually doing. How many people do more with the catalogue than throw it in the trash? Why does it have an incremental impact over email? Is it because those who engage with the catalogue expand reach – it gets to people who don't open the email? Is it because there's a synergistic effect on purchase if you're exposed to both catalogue and email? Or does it expand the range of our merchandise, with those engaged with the catalogue buying more things from us, different things than the email-exposed people do? Answers to these questions may help decide what to do with the catalogue to maintain its positive impact on revenue while cutting its cost.

Internal data on the email side (open, click through, conversion and on what items) might also be helpful.

I don't know if I'm asking for too much information here and just creating soup. But I'm fishing for the whys behind the picture because I think they may be helpful for decision making.
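
The lift figures quoted in the comment above can be reproduced with a short calculation. The dollar amounts come from the comment; mapping them to the four test cells (no marketing, email only, catalog only, email plus catalog) is an assumed reading of the comment, and the names are illustrative.

```python
# Revenue by test cell, as read from the comment above (assumed mapping).
revenue = {
    "none": 5.00,
    "email_only": 8.50,
    "catalog_only": 10.00,
    "email_and_catalog": 12.00,
}


def relative_lift(treated: float, baseline: float) -> float:
    """Fractional increase of `treated` over `baseline` (0.41 means +41%)."""
    return treated / baseline - 1.0


catalog_vs_none = relative_lift(revenue["catalog_only"], revenue["none"])
both_vs_email = relative_lift(revenue["email_and_catalog"], revenue["email_only"])

print(f"Catalog vs. no marketing: {catalog_vs_none:+.0%}")
print(f"Email + catalog vs. email alone: {both_vs_email:+.0%}")
```

These are revenue lifts only; they say nothing about cost, which is the point the reply below makes about looking at the last row of the table.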

Chris: It is very very important to state that there is no agenda at play here. The goal is simple: Find an unbiased, as-clean-as-possible way to identify incrementality added to the bottom-line of the company.

Whether the answer comes out to be something we like or not is beside the point.

I would also very strongly encourage you to think about the last row instead of the first. A lot of revenue that filters down to no impact on the company's bottom-line is a waste of everyone's time.

Finally… I do concur with you that we should seek to understand why something happens, not just that it does. I would go into that process with no biases. I think we both agree on that. :)

Avinash.

Another stellar post. I'm glad you reiterated how much you like the "net" – this is something that many people overlook and that can be an absolute killer. And we analytics/online marketing folks are often as guilty as anyone.

We can't fall in love with experimentation and data just for experimentation and data. When it's all said and done, we're running these tests and mining all the data to find truth that will ultimately make a positive difference in the bottom line of our companies.

The Net helps us remember that. Thanks again for a killer post.

Doug

Chris, I think the post is more about properly structuring the analytical approach rather than looking for answers to the questions created by the results. The fact that sales are generated from people who receive none of the programs is something web analysts have to face sooner or later. Most importantly, BI people already play by these "incremental" rules, so WA will have to get on board.

I am surprised by people not understanding the importance of this incremental concept – if the web is interactive, if "pull" actually works, why wouldn't sales occur when no programs are present? All this talk about customer-centricity and so forth – isn't "pull" the point of these ideas? But then, people ignore measuring the actual effects of the "pull" concept? This is how to measure the value of "pull"!

Last, on the comment specific to "fixing" the catalog, all your ideas might play into the solution, but the most important is segmentation / targeting, and this too plays into "pull", or more accurately, lack of pull. When the online "pull" begins to soften and fail (weeks since last purchase expanding), you have to "push" again, and catalog is a great way to do that. Also, careful merchandise segmentation can produce incremental effects, as well as geography. Only then, on to cost cutting.

It's all about right message, right person, right time. Now, most of what passes as "web marketing" is send all the messages to all the people all the time.

This is a tremendous waste of resources, as Avinash points out.

Recently, I ran a multivariate test for page optimization with Google Website Optimizer; it was simple and fun. But testing a marketing portfolio needs plenty of resources, so it is a little bit hard to launch.

Great post. Couple questions.

1. Was your example of a controlled experiment done in real life? i.e., was this a true experiment or an illustration?

If it was a true experiment, then what was the duration of the experiment? I am particularly interested in the experiment where there was no marketing done. It would be interesting to see how this trends over a period of time.

2. What is the significance of the "Pythagorean theorem" here?

Raghu: The duration depends on how long it takes for the difference between the test and control results to reach statistical significance. The duration would vary for each experiment.

The Pythagorean theorem image was simply a representation of something complex and beautiful. A visual aid. (And it is one of my favorite theorems! :)).

-Avinash.
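
One common way to check whether test and control results differ significantly is a two-proportion z-test. The sketch below assumes the outcome is a simple conversion (purchased or not) and uses made-up counts; the original post does not say which test was used, so treat this as an illustration rather than the author's method.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference in conversion rates.

    Returns (z, p_value). Uses the pooled-proportion standard error.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1.0 - pooled) * (1.0 / n_a + 1.0 / n_b))
    z = (p_a - p_b) / std_err
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Hypothetical counts: 120 buyers out of 1,000 in test, 80 out of 1,000 in control.
z, p_value = two_proportion_z_test(120, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With smaller groups or a smaller gap between the rates, the same test needs more time (more observations) before the difference stands out from noise, which is why the duration varies by experiment.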

I am trying to build a multi-channel attribution model where I want to measure the effectiveness of campaigns across media channels (TV, Radio, Print, Online) which may run during a common period.

I have also accounted for the decay effect using ad stocking in the vehicles. I wish to measure the effectiveness of each campaign, each of which caters to a different product segment. I am also using Sales as the dependent variable instead of queries or footfall.

How do I include the effect of all the vehicles and measure the effectiveness of each initiative?
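
The decay effect mentioned in the question above is often modeled as a geometric adstock, where each period carries over a fixed fraction of the previous period's accumulated effect. This is a minimal sketch of that common formulation; the decay rate and spend figures are hypothetical, and it is not necessarily the formulation the commenter used.

```python
def geometric_adstock(spend: list[float], decay: float) -> list[float]:
    """Adstock_t = spend_t + decay * Adstock_(t-1), with Adstock before t=0 equal to 0."""
    carried_over = 0.0
    adstock = []
    for amount in spend:
        carried_over = amount + decay * carried_over
        adstock.append(carried_over)
    return adstock


# Hypothetical weekly TV spend: a burst, two dark weeks, then a smaller burst.
weekly_tv_spend = [100.0, 0.0, 0.0, 50.0]
tv_adstock = geometric_adstock(weekly_tv_spend, decay=0.5)
print(tv_adstock)
```

The adstocked series for each vehicle, rather than raw spend, would then be the input to whatever model relates media to Sales.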

Priyotosh: For the type of situation you are describing, in my humble experience a multi-channel attribution analysis model might still leave you short of target because we don't know too much about too many things.

An optimal model is to use multi-channel controlled experiments to create test cells for isolating the impact of various channels. It is more difficult to accomplish, but getting good answers often takes that.

Avinash.

Hi Avinash,

Now that Google have ramped up the proportion of not provided data to over 75%, (see http://searchengineland.com/post-prism-google-secure-searches-172487), is it even possible to calculate the incremental benefit of ppc over organic for a set of search goals anymore?

It rather kills multitouch attribution for sets of search expressions, too. Organic searches reported from GWT are limited to the first couple of thousand (and in my experience are dominated by branded searches for our own site brands).

Google should have more confidence in its advertising model, and make the incremental improvement calculable! Otherwise we're back to the bad old days of 'wasting half of our advertising spend, and never knowing which half.'

I would be very interested in any insight you can shed on this.

Liam: I'm sketching out a post on the latest development with encrypted search, so I'll leave all the details to that one.

But a key part of that post is that Controlled Experimentation is going to be even more important now for SEO than it was in the past. We had every piece of data we wanted, now we don't, so we have to fall back to techniques like Media Mix Modeling that we learned to identify how much of our TV budget is wasted. :)

It will mean SEOs developing new skills, it will be tough but rewarding.

Avinash.

This is still an issue.

In our company, we have decided that the online marketing department can't handle all these different sources of traffic, and therefore we are experimenting with dividing the marketing department into sub-departments focusing on solo PPC, solo SEO etc.

This means that the individual departments are only responsible for the revenue generated by their traffic source, including retargeting.

So far, we have seen an increase in volume – but net profit has declined.

Thanks for a great post.

Mobilabonnement

Excellent post, Avinash!

I have used controlled experiments in non-digital marketing (it's still alive!) for many years, and it is by far one of the most powerful and simple to use tools in the toolbox. It can be used for different tasks, from measuring the effectiveness of an individual campaign to more complex estimates of channel effectiveness.

Over the years I have heard a lot of objections to the method, mostly from people who stand to lose something from its implementation, and quite honestly, the objections never have any material effect on the conclusions. When implemented correctly, controlled experiments are extremely resilient to all sorts of external market influences, and rightfully so – they are designed to control for them, after all!

I am very glad that digital marketers are starting to pay attention to analytical methods in assessing incremental impact. This is a great one!

Hi Avinash,

Very interesting and a great post.

Agree with Shilpa that there were tests/experimental design in offline marketing/channels/funnels etc. Now we see them in digital marketing.

In the example though this statement is interesting "It turns out if you completely stop marketing, and you are an established company, the impact is not that your revenue goes to zero!"

The operative phrase is "you are an established company". Now how the heck did it become an established company? Was there no marketing/advertising or some communications about it before this experimentation/analysis?

In an increasingly competitive world, the company that invests in analysis and refines its marketing, is the one that ultimately wins.

Avinash,

Thanks, you're a star. Your posts manage to cut through all the bullshit and get to the bare bones. This agile marketing is becoming far more commonplace now, and it certainly delivers results. I actually wrote a recent article on it if you're interested (rebelhack.com/2015/09/10/the-death-of-the-digital-marketing-agency).

But my question is this… if you are running experiments, both in channel and with resource and budget allocation, how can you account for the length of the buying cycle?

For example, let's say that I am running weekly SCRUMS, and my teams are busy running experiments in channels, and I am busy moving budgets to find the optimal outcome how can we measure the impact if the customer buying cycle is 7 weeks? Should my Sprints (and therefore SCRUMS) actually be 7 weeks in length?

Thanks. :)

Logan

Logan: Most experimentation platforms work based on sessions (visits). This means that they will only count an outcome as a success if it happens in one session. If it takes longer, the experiment will mark that visit as a failure (and if an outcome happens in the future, it will count that visit, and that one only, as a success).

I share that as context to say that indeed you should look at the Time Lag and Path Length reports (in the Multi-Channel Funnels folder) and take customer behavior on the path to conversion into consideration as you measure success for your experiments. But. You have to make sure your experimentation platform (your own or from a tools company) can measure what needs to be measured. :)

Avinash.

Avinash, Thanks for coming back to me.

I am not sure that I articulated my question well, so I will try again :)

I am reallocating budgets every week, based on analysis of the attribution models; first and last as end point comparisons and then time decay and position based. This helps inform my decision making.

The buying cycle is 7 weeks. The time path to the main event I am tracking online is 12 days (90% of the events I want happen in under 12 days).

If I am reallocating budgets every week (and not every 12 days), am I in fact failing to follow the scientific method of moving only one variable at a time? My concern is that by moving budgets every 7 days, I am in fact changing an input variable every 7 days, which will skew the results.

Should I be moving budgets every 12 days to compensate?

Another brick in the wall of impactful tips, Avinash!

Can anyone suggest a way to do incremental testing for view-through conversion campaigns on different channels like Facebook and DoubleClick Ad Network?

Would appreciate your feedback.

Thanks

Sparsh: Check with the tool you are using (DoubleClick and Facebook), they should have the ability to do this testing for you built in already or absolutely an ability to allow you to create tests with targeting that will allow you to measure the effectiveness of each campaign in driving view-thru conversions.

Avinash.

Yes, they have tools to do that on their individual platforms. But when I try to measure how each of the channels contributes to view-through conversions when running campaigns on both platforms simultaneously, there seems to be a lot of double counting. And since DoubleClick doesn't support impression tracking on Facebook, I can't get a clear picture of the scenario!

Curious – more than curious actually, have you worked with or know of any case studies where MLM – multi level marketing aka, network marketing is the business model?

This environment makes it nearly impossible – no impossible, to conduct such a channel campaign or program. Would you agree?

Thoughts are welcomed.

Karen: I'm sure there are some experiments that might be a bit harder to execute in an MLM environment. I am confident though that most of the questions you are looking to answer can be answered using controlled experiments.

The optimal path might be to hire a consultant with experience, they'll be able to share the full cluster of possibilities.

Avinash.

What method would you use to do a controlled experiment for value of brand search?

(Thinking isolating a specific geo and comparing results, or…?)

Matthew: Do you mean paid brand search? If so, you can use Adwords Drafts and Experiments.

If you want to understand value of brand search in context of your other campaigns (email or tv or display), then you can set up matched market tests.

If you mean organic brand search, you can measure conversion rate for brand search easily. Over time isolating for certain keywords you did not rank well for and at the same time rank well for, you can compare and contrast performance.

Hope this helps.

Avinash.

A typical question that comes up when you do this type of analysis is the level of bias that may be involved. For example, are we able to track the user's journey to shopping more effectively via online pixels vs offline results, which may not be 100% accurate?

How do you speak to the biases involved in such experiments and what do you do to control them?

Bala: You are right, there will be variations that we have to account for to the best of our ability.

One way in which we create our experiments is to create test and control markets to account for as many variables as we can. For example, current market penetration, audience type, competitor presence, number of retail store partners, Google search behaviour for certain cluster of queries, current marketing spend, etc. etc. We also don't pick just one test and one control market, which allows for more data.

Matching markets to run controlled experiments is an art, to which we apply as much science as possible. Then, we learn.

Avinash.
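
One simple way to approach the market matching described above is to standardize each market attribute and pick the candidate markets closest to the test market. The sketch below uses only two attributes and entirely hypothetical markets and values; it illustrates the idea and is not the procedure the author's team actually uses.

```python
import math
from statistics import mean, pstdev

# Hypothetical markets: (market penetration, current weekly marketing spend in $k).
markets = {
    "test_city": (0.30, 120.0),
    "city_b": (0.10, 40.0),
    "city_c": (0.28, 110.0),
    "city_d": (0.50, 300.0),
}


def standardize(rows: dict) -> dict:
    """Convert each attribute to z-scores so no single attribute dominates the distance."""
    columns = list(zip(*rows.values()))
    means = [mean(col) for col in columns]
    spreads = [pstdev(col) for col in columns]
    return {
        name: tuple((v - m) / s for v, m, s in zip(values, means, spreads))
        for name, values in rows.items()
    }


def closest_controls(rows: dict, test_market: str, k: int = 2) -> list:
    """Return the k candidate markets nearest to the test market in standardized space."""
    scaled = standardize(rows)
    target = scaled[test_market]
    distance = {
        name: math.dist(vector, target)
        for name, vector in scaled.items()
        if name != test_market
    }
    return sorted(distance, key=distance.get)[:k]


print(closest_controls(markets, "test_city", k=2))
```

Returning more than one control market mirrors the point above about not relying on a single test and a single control market.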