We just held our ninth session of our new national security class Technology, Innovation and Modern War. Joe Felter, Raj Shah and I designed a class to examine the new military systems, operational concepts and doctrines that will emerge from 21st century technologies – Space, Cyber, AI & Machine Learning and Autonomy.

Today’s topic was Autonomy and Modern War.

Catch up with the class by reading our summaries of the previous eight classes here.

Some of the readings for this class session included Directive 3000.09: Autonomy in Weapons Systems, U.S. Policy on Lethal Autonomous Weapon Systems, International Discussions Concerning Lethal Autonomous Weapon Systems, Joint All Domain Command and Control (JADC2), A New Joint Doctrine for an Era of Multi-Domain Operations, and Six Ways the U.S. Isn’t Ready for Wars of the Future.

Autonomy and The Department of Defense

Our last two class sessions focused on AI and the Joint Artificial Intelligence Center (the JAIC), the DoD’s organization chartered to insert AI across the entire Department of Defense. In this class session Maynard Holliday of RAND described the potential of autonomy in the DoD.

Maynard was the Senior Technical Advisor to the Undersecretary of Defense for Acquisition, Technology and Logistics during the previous Administration. There he provided the Secretary technical and programmatic analysis and advice on R&D, acquisition, and sustainment. He led analyses of commercial Independent Research and Development (IRAD) programs and helped establish the Department’s Defense Innovation Unit. And relevant to today’s class, he was the senior government advisor to the Defense Science Board’s 2015 Summer Study on Autonomy.

Today’s class session helped differentiate among AI, robotics, autonomy and remotely operated systems. (Today’s drones are unmanned systems, but they are not autonomous; they are remotely piloted or operated.)

I’ve extracted and paraphrased a few of Maynard’s key insights from his work on the Defense Science Board Autonomy study, and I urge you to read the entire transcript here and watch the video.

Autonomy Defined

There are a lot of definitions of autonomy, but the best one comes from the Defense Science Board: to be autonomous, a system must have the capability to independently compose and select among different courses of action to accomplish goals based on its knowledge and understanding of the world, itself, and the situation. The Board identified two types of autonomy:

- Autonomy at Rest – systems that operate virtually, in software, and include planning and expert advisory systems; for example, in cyber, where you have to react at machine speed

- Autonomy in Motion – systems that have a presence in the physical world. These include robotics and autonomous vehicles, missiles and other kinetic effects
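To make the Defense Science Board’s distinction concrete, here is a minimal Python sketch contrasting a pre-programmed system, a remotely operated one, and one that is autonomous in the DSB sense (it works out its own options from its understanding of the situation and selects among them). Everything in it, including the `Situation` fields, the navigation options and their scores, is a hypothetical illustration and is not drawn from the study or from any DoD system.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Situation:
    """Hypothetical snapshot of what a system knows about the world and itself."""
    gps_available: bool
    comms_available: bool
    battery_pct: float


@dataclass
class CourseOfAction:
    """A candidate plan with an illustrative feasibility test and score."""
    name: str
    feasible: Callable[[Situation], bool]
    score: Callable[[Situation], float]


def preprogrammed(s: Situation) -> str:
    # Pre-programmed ("dumb") automation: the same fixed plan every time,
    # regardless of the situation.
    return "fly_fixed_waypoints"


def remotely_operated(s: Situation, operator_choice: Optional[str]) -> str:
    # A human chooses the action and the machine only executes it,
    # so it is stuck if the link is denied or no command arrives.
    if not s.comms_available or operator_choice is None:
        return "lost_link_hold"
    return operator_choice


def autonomous(s: Situation, options: List[CourseOfAction]) -> str:
    # Autonomous in the DSB sense: the system itself determines which
    # courses of action are open given its view of the situation, then picks one.
    candidates = [c for c in options if c.feasible(s)]
    if not candidates:
        return "hold_and_request_guidance"
    return max(candidates, key=lambda c: c.score(s)).name


# Hypothetical options for a navigation goal; names and scores are made up.
OPTIONS = [
    CourseOfAction("gps_navigation", lambda s: s.gps_available, lambda s: 1.0),
    CourseOfAction("visual_navigation", lambda s: s.battery_pct > 20, lambda s: 0.7),
    CourseOfAction("return_to_base", lambda s: True,
                   lambda s: 2.0 if s.battery_pct <= 20 else 0.5),
]

# GPS and comms both denied, battery healthy.
denied = Situation(gps_available=False, comms_available=False, battery_pct=80.0)
print(preprogrammed(denied))                # fly_fixed_waypoints
print(remotely_operated(denied, "loiter"))  # lost_link_hold
print(autonomous(denied, OPTIONS))          # visual_navigation
```

In this toy setup only the autonomous variant adapts when GPS and communications are denied, which is the kind of environment the battlespace example below describes.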

A few definitions:

AI refers to computer systems that can perform tasks that normally require human intelligence (sense, plan, adapt, and act), including the ability to automate vision, speech, decision-making, swarming, etc. AI provides the intelligence for Autonomy.

Robotics provides kinetic movement with sensors, actuators, etc., for Autonomy in Motion.

Intelligent systems combine both Autonomy at Rest and Motion with the application of AI to a particular problem or domain.

Why Does DoD Need Autonomy? Autonomy on the Battlefield

Over the last decade, the DoD has adopted robotics and unmanned vehicle systems, but almost all are “dumb” (pre-programmed or remotely operated) rather than autonomous. The obvious applications are autonomous weapons and weapons platforms: aircraft, missiles, unmanned aerial systems (UAS), unmanned ground systems (UGS) and unmanned underwater systems (UUS).

Below is an illustration of a concept of operations for a battle space. You can think of it as the Taiwan Strait or the area near the Korean Peninsula.

On the left is a joint force: a carrier battle group, AWACS aircraft and satellite communications. On the right, aggressor forces in the orange bubbles are employing cyber threats, dynamic threats, and denied GPS and comms (things we already see in the battlespace today).

Another example: Adversaries have developed sophisticated anti-access/area denial (A2/AD) capabilities. In some of these environments human reaction time may be insufficient for survival.

Autonomy can increase the speed and accuracy of decision-making. Using Autonomy at Rest (cyber, electronic warfare) as well as Autonomy in Motion (drones, kinetic effects), you can move faster than your adversaries can respond.

Autonomy Creates New Tactics in the Physical and Cyber Domains

The combatant commanders asked the Defense Science Board to assess how autonomy could improve their operations. The diagram below illustrates where autonomy is most valuable. For example, in row one, on the left, you don’t need autonomy when the required decision speed is low. But as required decision speed, complexity, data volume and danger increase, the value of autonomy goes up. The right column shows examples of where autonomy provides value.

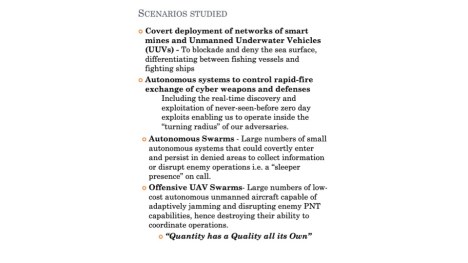

The Defense Science Board studied several example scenarios.

Some of these recommendations were funded immediately. One was the DARPA OFFSET (OFFensive Swarm-Enabled Tactics) program run by Tim Chung, who holds a record for flying a swarm of a hundred drones and who brought that expertise to DARPA to run a swarm challenge. Another DARPA investment was the Cyber Grand Challenge, to seed-fund systems able to search big data for indicators of Weapons of Mass Destruction (WMD) proliferation.

Can You Trust an Autonomous System?

A question that commanders and non-combatants alike ask is, “Can you trust an autonomous system?” The autonomy study specifically identified trust as core to the department’s success in broader adoption of autonomy. Trust is established through the design and testing of an autonomous system and is essential to its effective operation. If troops in the field can’t trust that a system will operate as intended, they will not employ it. Operators must know that if a variation in operations occurs or the system fails in any way, it will respond appropriately or can be placed under human control.
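The last requirement, that a failing or uncertain system can be placed under human control, can be sketched as a supervisory layer wrapped around the autonomy. The minimal Python example below is a hypothetical illustration: the `Supervisor` class, the confidence threshold and the health flag are assumptions for the sake of the sketch, not a description of any fielded DoD design.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Mode(Enum):
    AUTONOMOUS = auto()
    HUMAN_CONTROL = auto()


@dataclass
class Supervisor:
    """Hypothetical supervisory layer that gates an autonomous controller."""
    min_confidence: float = 0.8  # assumed threshold, for illustration only
    mode: Mode = Mode.AUTONOMOUS

    def step(self, healthy: bool, confidence: float, operator_override: bool) -> Mode:
        # A fault, a situation the system is not confident about, or an
        # operator request all hand control to a human.
        if operator_override or not healthy or confidence < self.min_confidence:
            self.mode = Mode.HUMAN_CONTROL
        # The mode is latched: once in HUMAN_CONTROL the system stays there
        # until a person explicitly re-engages autonomy.
        return self.mode

    def reengage(self) -> None:
        # Only an explicit human action returns the system to autonomy.
        self.mode = Mode.AUTONOMOUS


sup = Supervisor()
print(sup.step(healthy=True, confidence=0.95, operator_override=False))  # Mode.AUTONOMOUS
print(sup.step(healthy=True, confidence=0.50, operator_override=False))  # Mode.HUMAN_CONTROL
print(sup.step(healthy=True, confidence=0.99, operator_override=False))  # Mode.HUMAN_CONTROL
```

Latching the hand-off, rather than letting the system silently resume once conditions look better, is one way to make its behavior predictable to the operator, which is the point the study makes about trust.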

DoD Directive 3000.09 currently requires that a human be at the end of the kill chain for any autonomous system.

Postscript – Autonomy on the Move

A lot has happened since the 2015 Defense Science Board autonomy study. In 2018 the DoD stood up a dedicated organization, the Joint Artificial Intelligence Center (JAIC), which we talked about in the last two classes here and here, to insert AI across the DoD.

After the wave of inflated expectations, it has become clear that completely autonomous systems that handle complex, unbounded problems are much harder to build than originally thought. (A proxy for this enthusiasm versus reality can be seen in the hype versus delivery of fully autonomous cars.)

That said, all U.S. military services are working to incorporate AI into semiautonomous and autonomous vehicles, what the Defense Science Board called Autonomy in Motion. This means adding autonomy to fighters, drones, ground vehicles, and ships. The goal is to use AI to sense the environment, recognize obstacles, fuse sensor data, plan navigation, and communicate with other vehicles. All the services have built prototype systems in their R&D organizations, though none have been deployed operationally.

A few examples: the Air Force Research Lab has its Loyal Wingman and Skyborg programs. DARPA built swarm drones and ground systems in its OFFensive Swarm-Enabled Tactics (OFFSET) program.

The Navy is building Large and Medium Unmanned Surface Vessels based on development work done by the Strategic Capabilities Office (SCO). It’s called Ghost Fleet, and its Large Unmanned Surface Vessels development effort is called Overlord.

DARPA completed testing of the Anti-Submarine Warfare Continuous Trail Unmanned Vessel prototype, or “Sea Hunter,” in early 2018. The Navy is testing unmanned ships in the NOMARS (No Manning Required Ship) program.

Future conflicts will require decisions to be made within minutes or seconds, in some cases autonomously, compared with the current multiday process to analyze the operating environment and issue commands. An example of Autonomy at Rest is tying all the sensors from all the military services together into a single network, which will be JADC2 (Joint All-Domain Command and Control). The Air Force version is called ABMS (Advanced Battle Management System).

The history of warfare has shown that when new technologies become available as weapons, they are first used like their predecessors. But ultimately the winners on the battlefield are the ones who develop new doctrine and new concepts of operations. The question is, which nation will be first to develop winning autonomous concepts of operations? Our challenge will be to rapidly test these in war games, simulations, and experiments, then use that feedback and those lessons to iterate on and refine the systems and concepts.

Finally, in the back of everyone’s mind is this: DoD Directive 3000.09 prescribes what our machines will be allowed to do on their own, but what happens when we encounter adversaries who employ autonomous weapons that don’t follow our rules of engagement?

Read the entire transcript of Maynard Holliday’s talk here and watch the video below.

Lessons Learned

- Autonomy at Rest – systems that operate virtually, in software, and include planning and expert advisory systems

  - For example, cyber, battle networks, anywhere you must react at machine speed

- Autonomy in Motion – systems that have a presence in the physical world

  - Includes robotics and autonomous vehicles, missiles, drones

- AI provides the intelligence for autonomy

  - Sense, plan, adapt, and act

- Robotics provides the kinetic movement for autonomy

  - Sensors, actuators, UAVs, USVs, etc.

- Deploying completely autonomous systems to handle complex, unbounded problems is much harder than originally thought

- All U.S. military services are working to incorporate AI into semiautonomous and autonomous vehicles and networks

- Ultimately the winners on the battlefield will be those who develop new doctrines and new concepts of operations

  - We’re seeing this emerge on battlefields today
