5-Star Feedback Systems are Broken

In the early days of 2004 at oDesk, we had no feedback system. I answered hundreds of phone calls from customers. I would always ask for their feedback on what we could do better. Almost all of them told me their great idea - “You should launch a feedback system! Like eBay!”

So, we got it. We desperately needed a feedback system. We had Mary Lou Song (1st eBay employee) as an advisor. We studied other systems like eBay and RentaCoder. Ian Ippolito, founder of RentaCoder, deserves a lot of credit for building some very interesting elements into his feedback system, which is now in place at vWorker. Elance had a 5-star feedback system. RentaCoder had a 10-point scale with a text description for each point, such as “Good deliverables, but not on time.” eBay had a variety of feedback mechanisms, but most of it started as A++++++++++++ and then evolved into % positive vs. % negative (essentially a simple thumbs up or down).

When we set out to design oDesk’s system, we had no idea how some of our early decisions would become absolutely critical in shaping the marketplace. I distinctly remember one night debating until 11 pm whether or not people should receive default feedback scores when they were first getting started. Thankfully, we didn’t give out defaults but we did make feedback mandatory in the beginning. As with many things at oDesk, we debated each seemingly small aspect of the system very intensely for weeks.

This is what we ended up with:

- 5 star feedback system

- Composed of 6 different categories, e.g. Deliverables, Communication, Attitude

- 360 Feedback - buyer rates seller and vice versa

- Dollar weighted feedback

- All feedback scores are public

- Text comments could be hidden by recipient

- Mandatory feedback at end of job

- Cannot see the feedback you’ve received until you also provide feedback

- A user can change a feedback score once and only once if allowed to do so by the other party.
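To make the "dollar weighted" item concrete, here is a minimal sketch of one plausible reading: each job's star rating counts in proportion to the money billed on that job. The post does not spell out the exact formula oDesk used, so the `JobFeedback` fields, the `dollar_weighted_score` helper, and the sample history are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class JobFeedback:
    stars: float    # rating left for one job, 1.0 to 5.0
    dollars: float  # amount billed on that job (hypothetical field)


def dollar_weighted_score(jobs: Sequence[JobFeedback]) -> Optional[float]:
    """Average star rating, weighting each job by its dollar value."""
    total_dollars = sum(job.dollars for job in jobs)
    if total_dollars <= 0:
        return None  # no billed work yet, so no score (and no default score)
    return sum(job.stars * job.dollars for job in jobs) / total_dollars


# Made-up history: one large job that went well, one tiny job that did not.
history = [
    JobFeedback(stars=5.0, dollars=2000.0),
    JobFeedback(stars=2.0, dollars=50.0),
    JobFeedback(stars=4.0, dollars=450.0),
]

simple_average = sum(job.stars for job in history) / len(history)
weighted = dollar_weighted_score(history)
print(f"simple: {simple_average:.2f}, dollar-weighted: {weighted:.2f}")
```

Under this assumed weighting, the small job with a poor rating barely moves the score, while the simple average treats it the same as the large one.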

I’m proud of the system we built since it has led to a virtuous cycle – feedback scores are always increasing. However, when I look back with the benefit of seven years of results and experience, there are definitely a lot of things I would have done differently. And I know there are many things that are broken about current 5 star feedback systems.

Let’s look at some of the problems with typical 5-star feedback systems today.

False Sense of Security

What if I told you that almost all of the books on Amazon have top feedback scores? Doesn’t seem all that useful, right? Take a look at the numbers below. There are a grand total of 140k items in this category, and 99k of them (about 70%) are in the highest feedback filter: 4 stars and up. The problem with this is that it leads to a false sense of security. You may think it’s a highly rated product. In reality, it’s just not one of the worst 30% of products.

The Law of Averages

Take a look at rating distribution charts on Yelp. Most of them look pretty similar. Unfortunately, that means that the simple average of these distributions pretty much always equals 4.0 stars. More interesting perhaps is to look at % 5 star rating or maybe simply total # of reviews.

I examined the scores at Amazon, Yelp, and oDesk and display the distribution of feedback scores below. With the exception of Yelp, which is 4-centered, everything else is 5-centered. More importantly, about 90% of all the results fall in the top feedback tier across all of these marketplaces. So, the next time someone says “we have over a 90% customer satisfaction rate,” consider that 90% is just plain average.
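As a rough illustration of why the simple average hides so much, the sketch below summarizes two rating histograms by mean, share of 5-star ratings, and review count. The `summarize` helper and both histograms are invented for this example; they are not data from Amazon, Yelp, or oDesk.

```python
from typing import Dict


def summarize(histogram: Dict[int, int]) -> Dict[str, float]:
    """Return the mean rating, the share of 5-star ratings, and the review count."""
    count = sum(histogram.values())
    if count == 0:
        raise ValueError("histogram has no reviews")
    mean = sum(stars * n for stars, n in histogram.items()) / count
    return {
        "mean": mean,
        "five_star_share": histogram.get(5, 0) / count,
        "count": count,
    }


# Invented histograms (stars -> number of reviews), not real marketplace data.
polarizing = {1: 15, 2: 0, 3: 5, 4: 30, 5: 50}
lukewarm = {1: 0, 2: 20, 3: 60, 4: 220, 5: 100}

for name, hist in [("polarizing", polarizing), ("lukewarm", lukewarm)]:
    s = summarize(hist)
    print(f"{name}: mean={s['mean']:.1f}, "
          f"5-star={s['five_star_share']:.0%}, reviews={s['count']}")
```

Both made-up listings average exactly 4.0 stars, yet one has twice the share of 5-star ratings and the other has four times as many reviews, which is the kind of difference the average alone cannot show.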

Feedback Inflation

This is one of the trickiest issues. Most people in the world a) are generally nice, and b) avoid confrontation. The unfortunate result of a + b is c: feedback inflation. This issue is especially prevalent in oDesk, where feedback is public and personal. In the cases where you may want to leave negative feedback, it may not be worth it to you because you know that you’ll be insulting someone publicly. In oDesk’s case, it literally could mean the end of their career. I’ve heard stories of people leaving a negative feedback score and then being pestered with 38 consecutive emails demanding that they change the feedback score. Sites should consider making more aspects of their feedback and reputation systems private to avoid these sorts of issues.

Categorical Feedback Breakdown

Some sites allow users to rate something on a few different attributes like price, value, service, etc. These breakdowns are fairly useless. There is almost no variability between the scores a reviewer gives in each category. People either love something or they don’t.

Bimodal Distribution

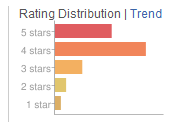

Most feedback systems are optional, so the user needs some motivation to leave feedback. Mobile developers know this well and are getting pretty savvy about when they pop up a little notification asking for a rating: they only do it after the user has become engaged and is clearly enjoying the app. The issue is also well known to the NPS (Net Promoter Score) community, which divides respondents into promoters, passives, and detractors. Passives are the ones that rate you in the middle, and well, they tend to be passive. What that means is that you end up with a fair number of 1s, almost no 2s or 3s, a big number of 4s, and a huge number of 5s.
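The selection effect described above can be sketched with a toy model: start from what users actually think, multiply by how likely each group is to bother leaving a rating, and look at what gets submitted. Every number here (`true_counts`, `response_rate`) is made up to illustrate the mechanism, and `expected_observed` is a hypothetical helper, not a measured result from any site.

```python
from typing import Dict


def expected_observed(true_counts: Dict[int, int],
                      response_rate: Dict[int, float]) -> Dict[int, float]:
    """Expected number of submitted ratings at each star level."""
    return {
        stars: true_counts[stars] * response_rate[stars]
        for stars in sorted(true_counts)
    }


# Invented opinions of 1,000 users: most are somewhere in the middle or happy.
true_counts = {1: 50, 2: 100, 3: 250, 4: 300, 5: 300}
# Invented response rates: angry and delighted users speak up, passives do not.
response_rate = {1: 0.5, 2: 0.05, 3: 0.05, 4: 0.2, 5: 0.4}

observed = expected_observed(true_counts, response_rate)
for stars, n in observed.items():
    print(f"{stars} stars: {n:6.1f} {'#' * round(n / 5)}")
```

With these assumed rates, the middle of the distribution mostly disappears from the submitted ratings even though it is the largest group of actual opinions.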

So, what is the solution?

I really don’t know, but I think the future of feedback and reputation systems will be:

1) More social - let me see what friends recommend

2) More authoritative - apply PageRank principles to feedback system users

3) More objective - let me see behavioral data like return rates, engagement rates, etc
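For the "more authoritative" idea, one way it might look is sketched below: run a basic PageRank power iteration over a graph of who has endorsed whom, then weight each rater's stars by their rank. This is only an interpretation of the suggestion. The endorsement graph, the `pagerank` and `authority_weighted_score` helpers, the damping value, and the sample ratings are all assumptions.

```python
from typing import Dict, List


def pagerank(endorsements: Dict[str, List[str]],
             damping: float = 0.85,
             iterations: int = 50) -> Dict[str, float]:
    """Basic PageRank by power iteration; an edge a -> b means a endorsed b."""
    users = sorted(set(endorsements)
                   | {u for targets in endorsements.values() for u in targets})
    n = len(users)
    rank = {user: 1.0 / n for user in users}
    for _ in range(iterations):
        new_rank = {user: (1.0 - damping) / n for user in users}
        for user in users:
            targets = endorsements.get(user, [])
            if targets:
                share = damping * rank[user] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # This user endorsed nobody: spread their weight evenly.
                for target in users:
                    new_rank[target] += damping * rank[user] / n
        rank = new_rank
    return rank


def authority_weighted_score(ratings: Dict[str, float],
                             rank: Dict[str, float]) -> float:
    """Average of star ratings, weighted by each rater's rank."""
    total = sum(rank[rater] for rater in ratings)
    return sum(stars * rank[rater] for rater, stars in ratings.items()) / total


# Hypothetical graph: alice and bob endorsed carol, carol endorsed alice.
endorsements = {"alice": ["carol"], "bob": ["carol"], "carol": ["alice"]}
rank = pagerank(endorsements)

# Hypothetical ratings of one item: carol gave 3 stars, bob gave 5.
ratings = {"carol": 3.0, "bob": 5.0}
score = authority_weighted_score(ratings, rank)
print(f"simple: {sum(ratings.values()) / len(ratings):.2f}, weighted: {score:.2f}")
```

In this toy graph nobody has endorsed bob, so his 5-star rating counts for much less than carol's 3 stars, and the weighted score lands closer to her opinion.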

jbreinlinger posted this
